Ever since the release of ChatGPT late last year, artificial intelligence has dominated headlines and social-media chatter. The most common reaction to the dramatic recent advances of this technology has been complacency, with many observers seeming to revel in the sheer novelty of AI-generated content like children handed a new toy.

This attitude should concern us. Unwarranted optimism about disruptive technology enabled the uncritical embrace of social media and related web 2.0 platforms a decade and a half ago. Today, we are just beginning to reckon with the socially corrosive effects of these applications. There is every reason to believe that AI will be even more far-reaching in its impact than the last round of tech disruption.

What is needed, therefore, is a properly political debate about AI.

There are signs that such a debate is emerging. In late March, an open letter signed by luminaries in tech and academia, including Elon Musk, made news by calling for a six-month moratorium on AI development. (Musk has since been revealed to be working on an AI project of his own at Twitter, raising questions about his commitment to the letter’s position.)

On the political front, Italy in April temporarily banned ChatGPT on privacy grounds. The rest of the European Union, Britain, the United States, and Canada are eyeing similar moves to regulate or restrict AI, citing reasons ranging from its potential use as a tool to commit crime to more typical concerns about online misinformation. Missing from these government responses, however, are the elements of a more comprehensive and realistic stance on AI, one that takes into account the radical destabilizing effects such technologies can be expected to have at the level of political economy, not least massive job destruction.

The Biden administration’s prospective “AI Bill of Rights,” for instance, seeks to regulate AI with an eye to protecting individual rights and civil liberties. For all its ambition, such a framework fails to reckon with the threat of mass economic displacement—and resulting social disruption—posed by AI. Indeed, the danger is likely great enough to warrant a shift in focus away from individual rights and toward broader societal responsibilities. Ditto for the European Union’s proposed “AI Act,” which is different in its emphasis but similarly relaxed about the threat to general economic security.

A more commensurate response to AI would place concerns over the livelihoods and material well-being of citizens at the forefront of the debate, where they might serve as counterpoints to the still-dominant narrative about AI’s supposed inevitability and indispensability.

The challenge of AI calls for the cultivation of virtues and instincts rare in contemporary leaders. An English parliamentarian once declared (in a different context): “The supreme function of statesmanship is to provide against preventable evils. In seeking to do so, it encounters obstacles which are deeply rooted in human nature. One is that by the very order of things such evils are not demonstrable until they have occurred.” What is needed now is a politics of technology consistent with this prudent and properly conservative understanding of statesmanship.

AI is and ought to be a subject of immediate political and moral contestation, no less subordinate than any other issue to overriding human values or to the judgements of public reason as well as public sentiment. Such a position must also go beyond the reactionary longing for a static world and articulate a distinct conception of progress, for neither blithe techno-utopianism nor crude anti-modernism will serve as a sound response.

Over the past several years, the Western world has begun to shift away from a set of axioms that guided politics for decades—variously referred to as neoliberalism, the Washington Consensus, or the Californian Ideology. Paramount among these were a faith in the liberating power of unconstrained markets and technological progress, the conviction that nation-states must give way to an economically integrated global society, and the celebration of change and disruption.

This worldview has entered into crisis in recent years, but an alternative has only been partially and haltingly articulated by insurgent forces of both right and left. Broadly, though, the main tenets of the emerging alternative have come into view. Among the new axioms are that the forces of markets and technology have gone too far; that nation-states have a right and responsibility to insulate their citizens from the externalities of economic integration; and that the ceaseless pursuit of disruption and innovation has exhausted the social fabric and must be balanced with the need for security and stability.

Springing from such desires, this sensibility came to the fore in the 2016 election, when it was said that “Donald Trump supporters wanted to live in the 1950s while Bernie Sanders supporters just wanted to work in the 1950s,” revealing the popular yearning for order and predictability, whether understood in economic or cultural terms. The neoliberal vision still maintains a stranglehold on major institutions, but the yearning for a different vision is in evidence everywhere.

In political terms, this is why it is vital to confront the disruptive potential of AI: From the potential to automate away many jobs up to the predicted emergence of Artificial General Intelligence or systems with the capacity to acquire contextual understanding and supplant human intelligence altogether (an avowed goal of OpenAI and other tech companies), AI has the potential to derail the ongoing realignment away from the neoliberal dispensation.

As voters and candidates increasingly embrace a suite of post-neoliberal policies (reshoring, industrial policy, and immigration control, to name a few), the unfolding commercialization of advanced AI applications has the potential to negate or reverse nearly all of the gains ordinary citizens stand to make from the emerging paradigm. After all, what will be the point in defending people’s job security from the ravages of neoliberal globalization when it will just as soon be threatened by the even more merciless ravages of technological displacement? In the long term, according to some accounts, AI threatens severe disruption beyond the economic realm and may even endanger humanity’s survival.

It is for this reason that all those political forces attempting to move beyond the neoliberal paradigm, whether they call themselves democratic socialists, post-liberals, economic nationalists, or populist conservatives, ought to take a unified stand against the unrestricted dissemination of advanced AI. Policymakers should consider going several steps further than the moratorium idea proposed in the recent open letter, toward a broader indefinite AI ban, accompanied by a social compact premised on the permanent prohibition of advanced AI across multiple industries as a means of obtaining and preserving economic security. After decades of living under a dispensation in which the ever more exacting demands of constant technological change were regularly imposed without appeal (“Learn to code!”), Americans would be justified in clamoring for such a sweeping counterrevolution. But it is the responsibility of leaders to ensure that the outcome is not a society that has rejected technology altogether, but one that has learned to harness it to human ends.

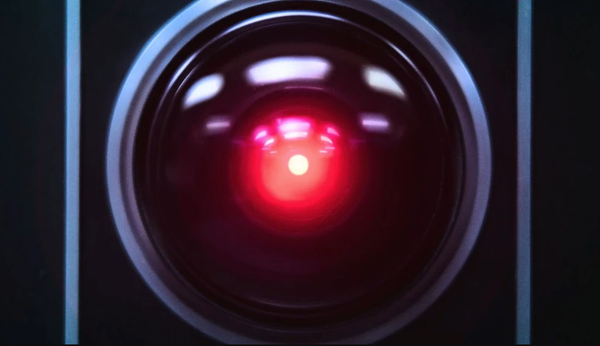

The speculative worlds of science fiction offer a sense of the principles that should animate this aggressive stance. Frank Herbert’s Dune series makes explicit a conceit that other space operas gloss over: For any human stories, human politics, or human social relations to be kept stably in place for an extended period of time, there can’t be a competing form of advanced AI designed to supplant humanity. Ten thousand years before the events of Dune, the novel’s interstellar society undergoes “the Butlerian Jihad,” a crusade in which humans rise up against “thinking machines” and establish the sacred religious principle that would bind subsequent human cultures: “Thou shalt not make a machine in the likeness of a human mind.”

The concept of “Butlerian Jihad” derives from the work of the Victorian writer Samuel Butler, whose 1863 essay “Darwin Among the Machines” and novel Erewhon, published a decade later, posit scenarios where humanity confronts machines smart enough to replace their creators, leaving only two possible outcomes: the destruction either of humanity or of the machines. Herbert’s 1981 novel, God Emperor of Dune, the fourth of the six Dune novels, recounts the ultra-humanist argument of the struggle as being against “a machine-attitude as much as the machines. … Humans had set those machines to usurp our sense of beauty, our necessary selfdom out of which we make living judgments. Naturally the machines were destroyed.”

The theme of an existential confrontation with AI pervades the sci-fi genre; generations of authors have scarcely been able to grapple with the thought of an equal and peaceful long-term coexistence between humans and human-like AI, and many arrived at the same view popularized recently by AI researcher Eliezer Yudkowsky, among others: The arrival of machinic superintelligence would mark the terminal point of human history. As AI firms work on making AGI a reality, humanity confronts the scenario Butler prefigured long ago: “We are daily giving them greater power and supplying by all sorts of ingenious contrivances that self-regulating, self-acting power which will be to them what intellect has been to the human race. In the course of ages we shall find ourselves the inferior race.”

At this juncture, the guiding assumption that technological progress will always redound to humanity’s long-term benefit can no longer hold. Instead of worrying about AI spreading misinformation or aligning with the wrong ideologies, we should acknowledge the degradation of human cognitive and creative faculties at the hands of a million convenient applications. This process isn’t a matter of prophetic science fiction: It is already underway.

The liberatory promises of the 1990s, the high point of the Californian Ideology, have led over the past decade and a half to mass epistemic fragmentation and social-cognitive degeneration: ever-shorter attention spans; a tribalized and post-rational society; citizens who are far less literate, creative, or capable of critical and abstract thinking than their forebears; human beings who are lonelier, more atomized, and prone to mental illness; a public discourse mired in the shallow and ephemeral; a nation stuck in a never-ending present, unable to remember its past or to imagine its future. All this was the result of outsourcing the processes of human thought and socialization to the algorithm. The ongoing drive to do the same with ever more powerful forms of machine intelligence is the culmination of these civilization-dissolving trends.

The scale of the envisioned transformations this time around is even more radical and all-encompassing: AI copywriters, AI lawyers, AI accountants, AI programmers, AI engineers, AI architects, AI musicians and composers, AI novelists, poets, and visual artists, AI administrators, AI filmmakers and actors, AI teachers, AI philosophers, AI doctors, and the list goes on (to include even AI friends and AI lovers).

To all the human beings who would be replaced, vague reassurances are made of a sustainable and fulfilling “partnership” between the human and the AI, one that would produce greater and better outputs. Human beings would still be needed to guide the robot’s actions and thought processes, or so we are told (just as some of those displaced workers had to train their own replacements). However, as Butler saw, there can be no real partnership between humans and human-like machines, because the effect over time would be to progressively dilute humans’ acumen and creativity while enhancing the machine’s.

Robots were long associated with the displacement of manual and menial labor, but AI’s recent turn to cognitive and creative labor has redistributed risk toward the “managerial elite” and “creative classes.” Meanwhile, many of those who work with their hands perform tasks that have thus far remained invulnerable to automation for various reasons. Some see the shifting class politics of AI as a chance to get back at the coddled professional-managerial classes, as if to say: “Let AI displace their bullshit jobs.” However, just as the pendulum quickly swung from AI as a blue-collar threat to a white-collar one, there is no way to foretell how future breakthroughs may change the risk distribution yet again and cause the pendulum to swing back. It may be the case that the succeeding generation of AI will have the capacity to wipe out the economic need for human brawn as much as human brain.

Intelligent machines may, within the next decade or so, acquire the needed qualities of dexterity, nimbleness, and sophisticated spatial cognition to make good on the long-running projections of AI truck drivers, AI construction workers, AI warehouse workers, AI farmers on AI-only farms, AI cooks, bartenders, baristas, and servers; and further down the line, maybe even AI nurses, AI cops, AI soldiers, AI firefighters, AI sailors, and so on across a range of physical work, even if such jobs are among the last to be automated after the white-collar occupations. It is also worth noting the prediction made by Daron Acemoglu and Simon Johnson that the adverse effect of AI on the US economy won’t just be limited to invalidating Americans’ jobs, but would extend as well to the erosion of Americans’ spending power, “the engine of the US economy.”

Rather than let the emergence of AI be the reason for perpetuating class conflict that risks distracting from the real AI threat, might it not serve instead as an opportunity to forge a new class truce uniting all who stand to lose from the AI revolution against the forces who stand to profit from it? Indeed, the militant Islamic term “jihad” evokes the likely level of emotional intensity and popular passion that AI can be expected to arouse once its effects become fully felt and institutionalized, pointing to the possibility of a redrawing of the political map around the cleavage of pro- and anti-AI.

Last year, Ross Douthat asked if free societies need a “wolf at the door” to thrive. In the past, that part in the West has been played by communism and fascism. In the 21st century, the looming economic and existential threat of AI—even more than the rise of China—may fill the role of a unifying and disciplining external foe to compel the maintenance of social peace.

What would this call for, policy-wise? Beyond a certain point, the further dissemination of generative and other advanced, job-threatening AI in both white- and blue-collar industries would be prohibited through public regulation, or else kept to an absolute minimum. Under these terms, the decision to introduce any disruptive automation would no longer be the sole prerogative of private industry, but would rather be subject to nationwide codes governing what is permissible or not in every industry. Any changes to these codes would have to be enacted at the national level, and the codes should in practice be politically difficult to loosen. The prevention of an AGI would be an explicit objective of this regulatory regime.

The prohibition would take effect past a certain threshold in the technological frontier, the precise determination of which would be a matter for the political leadership to decide in consultation with experts and stakeholders; the exact definitions and classifications of AI in the codes, as well as the exceptions to the rules, would all likewise be subject to political discretion. But a basic rule may be: The more generalized, generative, open-ended, and human-like the AI, the less permissible it ought to be—a distinction very much in the spirit of the Butlerian Jihad, which might take the form of a peaceful, pre-emptive reformation, rather than a violent uprising.

As to what kinds of AI use would be allowed to continue: Owing to their limited and task-driven nature, existing AI applications that are already used in, for instance, data analytics would be permitted. Other exceptions to the ban on most AI development and deployment would include: the use of specialized (objective-bound, non-generative) AI in controlled lab settings to perform complex research-related tasks, such as in medical science or physics experiments; the use of specialized AI in industrial operations within factory settings, which will almost certainly be needed in the course of re-industrialization in a post-globalized era; and, in the area of national security, the development of defense-related AI applications needed to maintain parity with strategic competitors.

There is, of course, much more to the defense and geopolitical implications of AI than can be covered here. Suffice it to say that going forward, the United States would have two risk matrices to manage simultaneously: One is the competition with rivals, notably China, to maintain a competitive strategic and technological edge on AI; and the other is the control and regulation of the defense-related AI itself, a concern that would occupy China’s leaders as well, who are also in the midst of crafting their distinctive approaches to the AI challenge.

Given the risks of a rogue or malfunctioning AI in the defense sector, Washington and Beijing would likely have an interest in restricting their AI defense technologies to limited, specialized, and non-comprehensive applications. The strategic settlement for advanced AI may end up looking like the historic one for nuclear weapons, with the great powers largely regulating risk among themselves while presiding over a global nonproliferation regime. Beyond the military sphere, the idea of international standards on AI should logically extend to trade and economics, as well.

The adoption of such a qualified approach to technology would place America, and the world, on a markedly different path from the reflexive techno-optimism that has reigned for decades. But if the nation-state assumes such power over what can and can’t be done technologically, wouldn’t it amount to a repudiation of the idea of progress? Many on the anti-modern “degrowth” left and “trad” right are now advocating exactly this. There is, however, another view, which transcends the choice between inexorable progress and reactionary anti-progress.

The Californian Ideology appealed to Jeffersonian ideals and imagery (the rugged individual, the restless frontier, the decentralist ethos) to legitimize the rush to technological saturation and global integration. Like good Jeffersonians, its proponents invoked the optimistic, romantic view of politics and economics, which also allowed them to abdicate responsibility for the negative externalities of their project and to attribute them all, instead, to the unquestionable, “spontaneous” will of the market.

A different view of progress may be found in the properly conservative Federalist and Whig tradition of US history, stretching from Alexander Hamilton through Henry Clay to the two Roosevelts, which unfortunately has almost no recognizable heirs in US politics today (least of all in the schizophrenic sect that calls itself “the conservative movement”). This tradition sought the pursuit of growth and order together. It reserved a place for responsible and conscientious elites to guide national development, according to them the right and responsibility to decide on the quality as well as the quantity of progress that’s right for a society at any given time.

What would “conservative modernization” look like in America today? It would consummate the new, anti-neoliberal consensus by supplanting neoliberal globalization with national reindustrialization as the dominant paradigm, while instituting the regulations on AI proposed above. The death of “bullshit jobs” may instead be accomplished once managerial elites are made to turn their cognitive and organizational abilities to the serious task of building a new industrial economy, thereby also enabling their blue-collar compatriots to find work in the manifold forms of physical and manual labor that such an economy would still require. The cry that proscribing AI would limit overall productivity might be true, but it must be met with the reply that the price to be paid in terms of mass displacement and disorder, not to mention a society-wide crisis of meaning, is much too steep to be considered.

To be sure, an anti-modern rejection of progress itself would cause further material regression, fragmentation, and erosion of state capacity, ultimately ceding more power to unaccountable private actors and hostile foreign powers. In line with the older conservative tradition, leaders of the realignment must recognize the necessity of progress no less than the need to protect society from what Melville called “the impieties of progress.” The adoption of a conservative view of progress and modernization, defined against both the neoliberal and the reactionary positions, would guard against that possibility. The thrust of this version of (re-)modernization would be a rapid expansion of the economy of atoms, accompanied by a corresponding limitation and contraction of the economy of bits, signifying a reversal of neoliberal trends.

Beyond all of the immediate economic and political challenges posed by AI, there is also the still somewhat distant prospect of post-human consciousness, whether arrived at through artificial intelligence or biological modification or both. According to some influential futurists, such a prospect becomes inevitable once the scale of events is measured in centuries, rather than decades. By putting the AI revolution in a deep freeze, the arrangements proposed here would buy time for humanity to ensure that such a transition is peaceful and humane. This can’t, of course, happen if AI is simply allowed to rip through society and the economy.

Though stemming from material and existential concerns, a prohibition against AI may also have a salutary and revivifying effect on human culture. More than averting an apocalypse, pushing back against the further technological saturation of society could trigger a deeper reaction against the cultural and ideological status quo, one that would aim at rolling back the years of technology-induced creative, intellectual, and civilizational stultification.

In the universe of Dune, the Butlerian Jihad opens up rich new avenues for the radical expansion and redefinition of human capabilities once thinking machines are outlawed. The various schools of the Dune universe, like the Mentats and the Spacing Guild, fulfill the complex cognitive functions once reserved for machines, aided by fantastical chemical stimulants. In the novels, the protagonists, Paul Atreides and his son, Leto II, gain heightened powers of prescience, illustrating what Herbert imagined as the Olympian apex of human development once totally decoupled from dependence on thinking machines.

Analogously, a revolt against AI in the real world could foster a newfound reverence for human thought and creativity. Individuals across diverse fields could be inspired to reinhabit the slow, organic, and contemplative realms of thinking and imagination that have been largely vacated in our digitally warped cultural environment. Writers and artists of all kinds may now be compelled to create by their own hands all the books, films, plays, music, treatises, philosophies, works of art, and cultural wealth that the rise of generative AI would have otherwise rendered obsolete, spurring a new search for vitality and originality in the arts—to recapture what Herbert’s Leto described as that “sense of beauty” and “necessary selfdom” that animate human enterprise.

By the same token, the humanities, having come so close to the prospect of virtual invalidation in this scenario, could be shaken out of their current state of mediocrity and torpor, redirected away from the moral and epistemic fragmentation of the postmodern era, and pointed back in the direction of that antiquated notion of a common humanity, the rediscovery of which may serve as the unifying theme of a new cultural renaissance. The human spirit may yet grasp a new lease on life, culturally and spiritually, no less than materially and economically.